Autonomous Vehicles

CAVS is at the forefront of autonomous mobility research. Our primary focus? Developing solutions for non-urban environments.

Off-road, industrial, and heavy-duty vehicle automation are among the last frontiers of autonomous mobility, and CAVS is combining our unique capabilities to be a leader in these fields. With top-rated high-performance computing capabilities and one-of-a-kind vehicle proving grounds, CAVS is able to validate advanced modeling and simulation developments in real-world situations. CAVS also has full-suite capability for autonomous system development, including sensor research, artificial intelligence and vehicle robotization.

Proving Ground

In autonomous vehicle development, designs for unstructured, off-road environments can be very different from those for structured, on-road applications. A typical on-road deep learning system must focus on identifying cars, pedestrians and traffic signals, and is built to respond to roadway features such as signage and lane markings. In contrast, off-road autonomous vehicles must be able to detect trees, rocks, abrupt changes in elevation and other obstacles. Active sensors, such as LiDAR, also respond differently to organic materials, like foliage, than to man-made materials, like steel and glass.

The CAVS vehicle proving grounds, located on 55 acres of land adjacent to the CAVS building, provide controlled-access testing capabilities for both autonomous vehicles and vehicle mobility in an off-road environment. The proving grounds feature various terrains, including sand, rocks, tall grass, wooded trails and lowlands. Varying courses provide transition points between different terrain and lighting scenarios. Planned improvements to the site include hard-surface test capabilities, such as four-lane roads, entrance and exit ramps, and a general-use concrete and asphalt pad.

Sensor Data Processing and Fusion

The sensors that give an autonomous vehicle its view of the surrounding world are among its most critical components. CAVS researchers are working with a range of sensors, including LiDAR, multispectral cameras, radar and ultrasonic sensors, to investigate the following questions:

- How to best apply each sensor technology

- How to filter and process the vast amounts of data generated

- How to fuse together data from different sensor types
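As a small illustration of the third question, one textbook way to combine two independent readings of the same quantity (say, a LiDAR range and a radar range to one obstacle) is inverse-variance weighting, where the less noisy sensor gets the larger weight. The sketch below is a generic example and not a description of the methods used at CAVS; the function name `fuse_estimates` and the sample values are hypothetical.

```python
def fuse_estimates(estimates):
    """Fuse independent (value, variance) pairs by inverse-variance weighting.

    Hypothetical helper for illustration only. Each pair is one sensor's
    estimate of the same quantity and the variance of its noise.
    """
    # A sensor with smaller variance (less noise) receives a larger weight.
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(
        weight * value for weight, (value, _) in zip(weights, estimates)
    ) / total_weight
    # The fused variance is never larger than the best single sensor's.
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Assumed example readings: (range in meters, variance in square meters).
lidar_range = (10.0, 0.25)
radar_range = (12.0, 1.0)
fused_range, fused_var = fuse_estimates([lidar_range, radar_range])
```

The fused estimate lands closer to the lower-variance reading. Real systems typically also have to align sensors in time and space before any such combination is meaningful.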

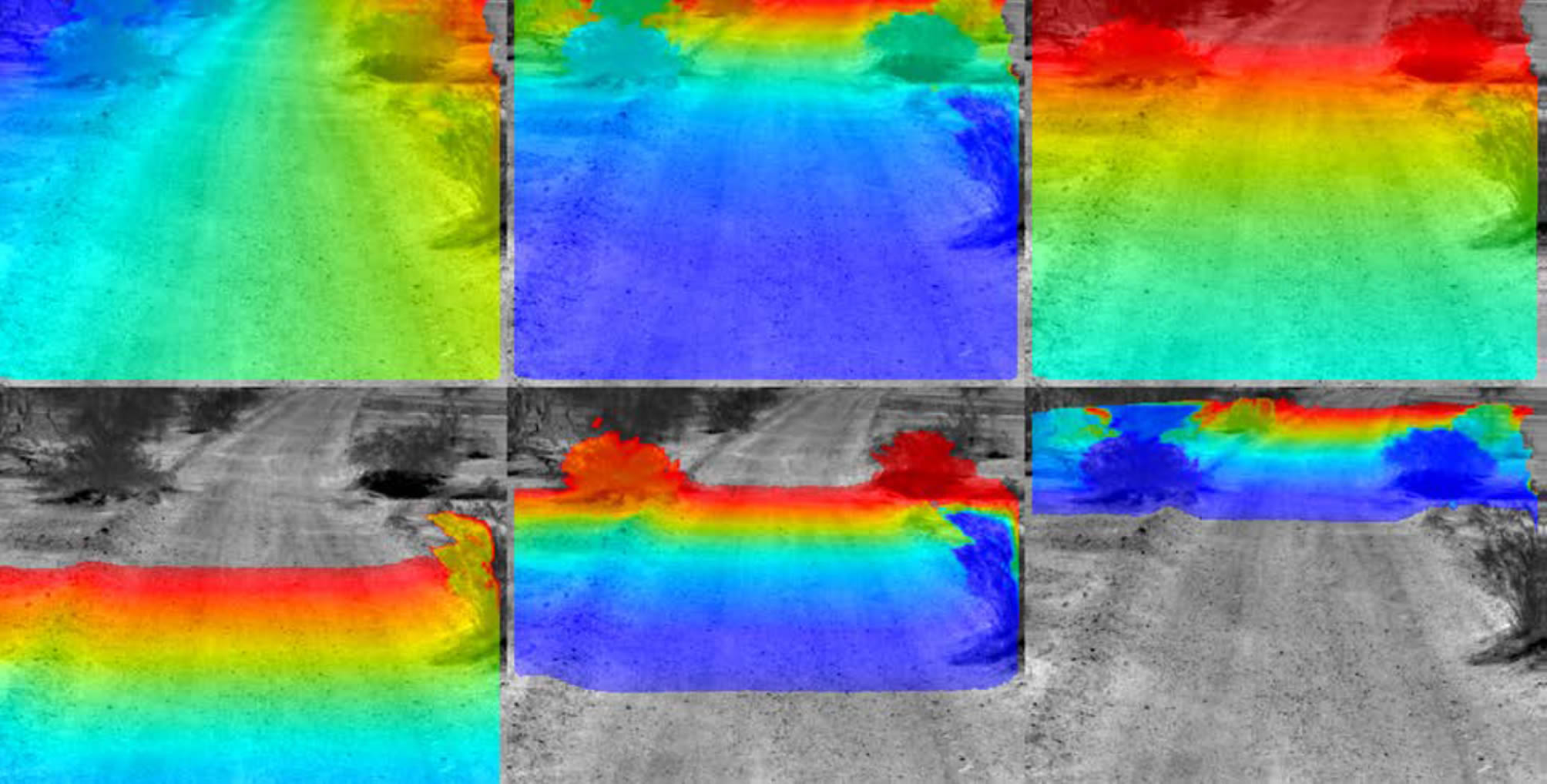

Three-dimensional scene reconstruction from a single infrared camera on a ground vehicle. The "depth images" for different standoff distances from the vehicle are color-coded: blue represents smaller values and red represents larger values.

Infrared sensors: detection of buried explosive hazards in infrared imagery. Top: features (histogram of local binary patterns) extracted from the raw imagery. Bottom: features extracted from a filtered image.
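For readers unfamiliar with the feature named in the caption, a histogram of local binary patterns can be sketched as follows. This is a minimal, generic version of the basic 8-neighbor operator in plain Python, assuming a grayscale image stored as a 2-D list; it is not the CAVS implementation, and the function name `lbp_histogram` is hypothetical.

```python
def lbp_histogram(image):
    """Normalized 256-bin histogram of 8-neighbor local binary pattern codes.

    `image` is a 2-D list of grayscale values, at least 3x3 in size.
    Border pixels are skipped because they lack a full neighborhood.
    """
    # Neighbor offsets, clockwise from top-left; neighbor k sets bit k.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    rows, cols = len(image), len(image[0])
    counts = [0] * 256
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            center = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # A neighbor at least as bright as the center sets its bit.
                if image[r + dr][c + dc] >= center:
                    code |= 1 << bit
            counts[code] += 1
    total = sum(counts)
    return [count / total for count in counts]


# Assumed toy input: a vertical edge, bright on the left and dark on the right.
edge = [[9, 9, 0],
        [9, 9, 0],
        [9, 9, 0]]
edge_hist = lbp_histogram(edge)
```

Each pixel's code records which neighbors are at least as bright as it is, so the histogram summarizes local texture rather than absolute intensity. That is one reason such features are often computed on both raw and filtered imagery.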

Deep Learning

As artificial intelligence continues to drive the development of autonomous vehicles, the use of practical, real-time deep learning algorithms has also been on the rise. CAVS is leading the way in developing deep learning technology, with teams of faculty, research engineers and students using neural networks to identify objects from camera images. While working with industry leaders like NVIDIA, CAVS is specifically exploring deep learning for use in rural environments.

Training neural networks requires extensive data sets and human effort to manually classify objects. Using simulation can shorten this data collection and labeling cycle. This is critical for off-road applications, since there is more variety in natural obstacles, like trees, than in man-made objects.